One of the main ways, if not the main way, that customers will find your website is through a search engine. It’s therefore worth spending time to ensure that search engines index your website properly.

Robots.txt

Robots.txt is a simple text file that tells search engine crawlers whether they are allowed to crawl your website and, if so, which pages they may access. There are generally three components to a robots.txt file: the user-agent string, the allow/disallow directives, and the sitemap location.

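User-agent: this can be used to define different crawl settings for different web crawlers. If you wanted to disallow an extra directory just for Googlebot, you could add a User-agent: Googlebot line and follow it with the directory to disallow. Keep in mind that a crawler generally follows only the most specific group that matches it, so a Googlebot group should repeat any rules from the general group that still need to apply. Otherwise, User-agent: * applies to all web crawlers.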

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Disallow: /wp-includes/

Allow: /wp-includes/js/

Allow: /wp-includes/images/

Disallow: /trackback/

Disallow: /wp-login.php

Disallow: /wp-register.php

Sitemap: https://www.yoursite.com/sitemap.xml

The above is an example of a fairly standard WordPress robots.txt. There are also many plugins that will automate part of the setup process, or provide an easier way to edit the file through WordPress (by providing a virtual file). At the bottom of the file, include the Sitemap directive followed by the location of your XML sitemap, and make sure the sitemap itself isn’t blocked by the robots.txt.
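
Before relying on a robots.txt, it can help to test it locally. The following is a minimal sketch, assuming Python's standard `urllib.robotparser` module and the example rules above; the test paths such as `/blog/hello-world/` and `/wp-admin/options.php` are hypothetical and only there for illustration.

```python
from urllib.robotparser import RobotFileParser

# The example WordPress rules from this article.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-includes/
Allow: /wp-includes/js/
Allow: /wp-includes/images/
Disallow: /trackback/
Disallow: /wp-login.php
Disallow: /wp-register.php
Sitemap: https://www.yoursite.com/sitemap.xml
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
SITE = "https://www.yoursite.com"

# Hypothetical paths, chosen only to exercise the rules above.
for path in ("/", "/blog/hello-world/", "/wp-login.php", "/wp-admin/options.php"):
    verdict = "allowed" if rules.can_fetch("*", SITE + path) else "blocked"
    print(f"{path}: {verdict}")

# site_maps() requires Python 3.8 or newer.
print("Sitemaps:", rules.site_maps())
```

One caveat: Python's parser applies the first rule that matches, in file order, whereas Google documents that it uses the most specific (longest) matching rule. For overlapping pairs such as `/wp-admin/` and `/wp-admin/admin-ajax.php`, the two can therefore disagree, so treat this as a rough check rather than a replica of any particular crawler.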

Sitemaps

A sitemap is an XML file that lists the pages on your site and their locations, along with other information such as roughly how often each page changes, its last-modified date, and the page’s priority. There are many plugins for WordPress that can be used to generate sitemaps, along with online sitemap generators.
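
To make the format concrete, here is a rough sketch of generating a small sitemap with Python's standard `xml.etree.ElementTree`. The element names (`loc`, `lastmod`, `changefreq`, `priority`) come from the sitemaps.org protocol; the page list, dates, and the `build_sitemap` helper are placeholders invented for this example, not output from any plugin.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> bytes:
    """Build a sitemap document from a list of dicts describing pages.

    Each dict needs a "loc" key; "lastmod", "changefreq" and "priority"
    are optional, matching the optional tags in the sitemap protocol.
    """
    # Serialize with the sitemap namespace as the default namespace.
    ET.register_namespace("", SITEMAP_NS)
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page in pages:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        for tag in ("loc", "lastmod", "changefreq", "priority"):
            if tag in page:
                ET.SubElement(url, f"{{{SITEMAP_NS}}}{tag}").text = str(page[tag])
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Placeholder pages; replace with your own URLs and dates.
pages = [
    {"loc": "https://www.yoursite.com/", "changefreq": "daily", "priority": "1.0"},
    {"loc": "https://www.yoursite.com/about/", "lastmod": "2024-01-15",
     "changefreq": "monthly", "priority": "0.5"},
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml.decode("utf-8"))
```

In practice a WordPress plugin will produce this file for you; the sketch is only meant to show what the plugin is writing.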

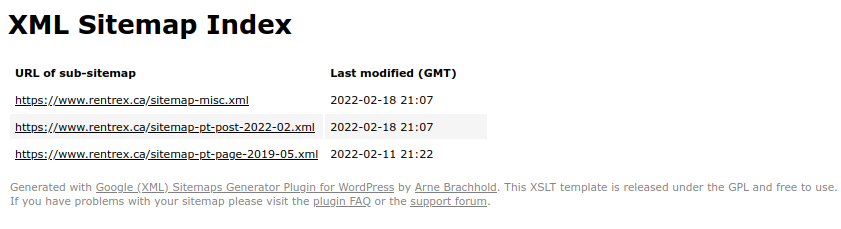

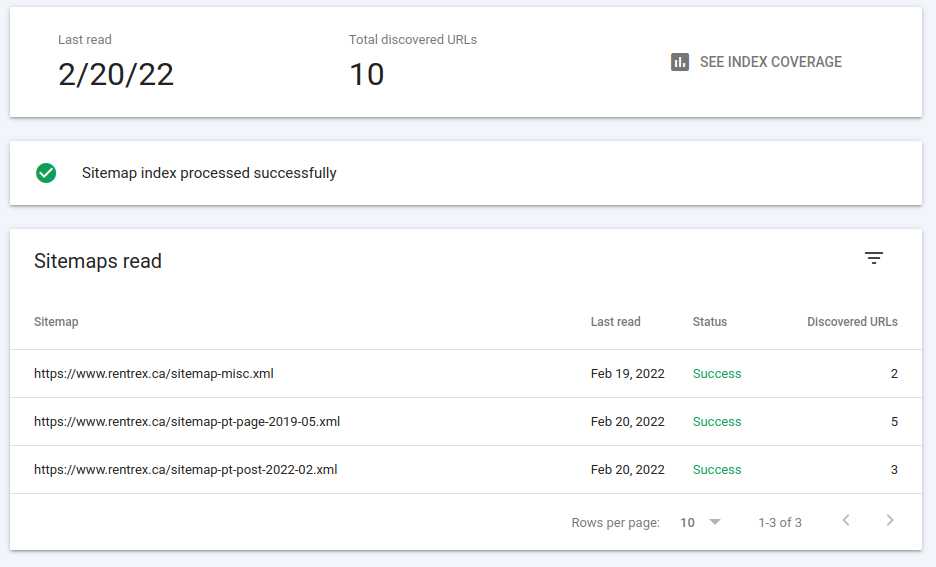

Above is the sitemap for this website; it links to a number of sub-sitemaps that include more information.

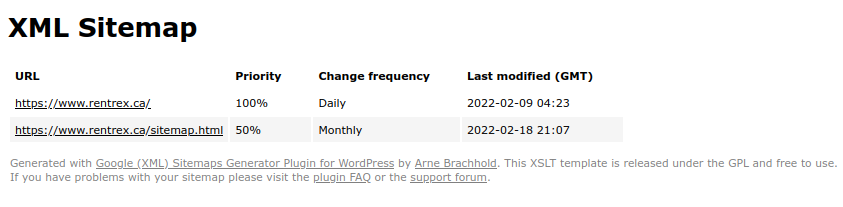

The sitemap-misc sub-sitemap lists the location of the sitemap itself and the homepage.
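
A parent sitemap that links to sub-sitemaps is called a sitemap index in the sitemaps.org protocol. As an illustration, the sketch below reads one and lists the sub-sitemaps it points to. The index content is a made-up stand-in (only the `sitemap-misc` name is taken from this site; the other file name and the `list_sub_sitemaps` helper are hypothetical).

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap index; a real one would be downloaded from your site.
SITEMAP_INDEX = """<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>https://www.yoursite.com/sitemap-misc.xml</loc>
  </sitemap>
  <sitemap>
    <loc>https://www.yoursite.com/sitemap-posts.xml</loc>
  </sitemap>
</sitemapindex>
""".encode("utf-8")


def list_sub_sitemaps(index_xml: bytes) -> list:
    """Return the <loc> of every <sitemap> entry in a sitemap index."""
    root = ET.fromstring(index_xml)
    return [loc.text.strip() for loc in root.findall("sm:sitemap/sm:loc", NS)]


sub_sitemaps = list_sub_sitemaps(SITEMAP_INDEX)
for location in sub_sitemaps:
    print(location)
```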

Webmasters Tools

If you haven’t already verified your domain to use the webmasters tools, follow the guide here

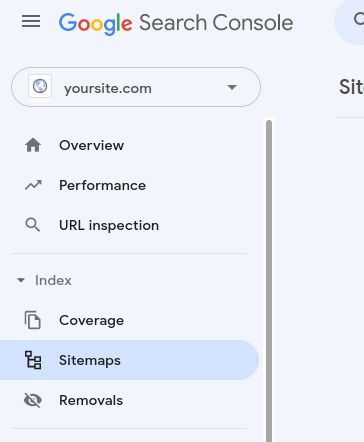

Now that our robots.txt file and our sitemap are set up, we can use the webmasters tools provided by search engines to ensure that they are properly indexing our website and processing our sitemaps. Generally there is a verification process before you can access these tools, which may require access to modify your DNS records (usually adding a CNAME or TXT record). Once you are verified, you will be able to access them. We’ll review Google’s Search Console below; the process for Bing and others is very similar.

To confirm your sitemap has been successfully detected and parsed, navigate to the sitemaps section:

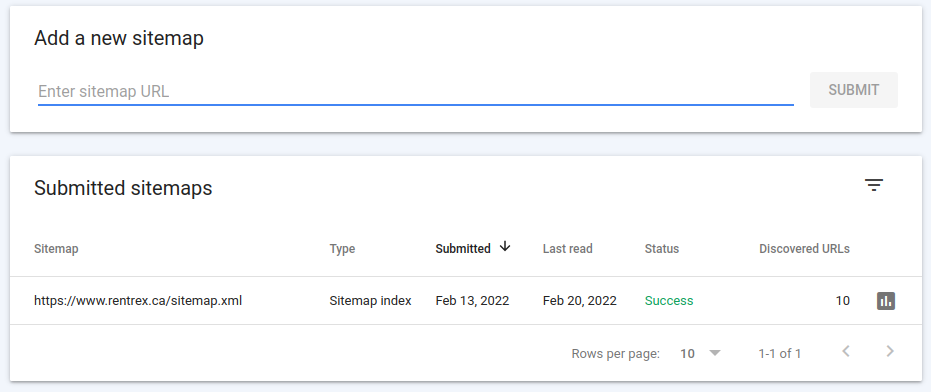

We can see above that the sitemap has been submitted and processed successfully. If your sitemap has not been processed, submit it using the “Add a new sitemap” section. Next, we will click on the sitemap to view more information about its processing and validate that everything has been processed correctly.

Above we can see the individual sitemaps that have been processed and the URLs related to them. All of them show a success status, and the number of URLs discovered is consistent with the number submitted in each sitemap.

If you receive an error, such as a 404 from an address in your sitemap, verify that the sitemap is up to date and doesn’t contain links to any pages that no longer exist. Once it’s been corrected, resubmit it so that Google and other search engines will be able to index it correctly.
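
You can also catch dead links before a search engine reports them. The sketch below is one possible approach, not a feature of Search Console: it reads the URLs out of a sitemap and reports any that return an HTTP error status. The function names, the sample sitemap, and the stand-in `fake_status` lookup are all hypothetical; the stand-in is used so the example runs without network access.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for a URL using a HEAD request."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as exceptions; the code is what we want.
        return err.code


def find_broken_urls(sitemap_xml: bytes, fetch_status=http_status) -> list:
    """Return (url, status) pairs for sitemap entries with status >= 400."""
    root = ET.fromstring(sitemap_xml)
    broken = []
    for loc in root.findall("sm:url/sm:loc", NS):
        url = loc.text.strip()
        status = fetch_status(url)
        if status >= 400:
            broken.append((url, status))
    return broken


# Hypothetical sitemap and a fake status lookup for an offline demonstration.
SAMPLE_SITEMAP = b"""<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.yoursite.com/</loc></url>
  <url><loc>https://www.yoursite.com/old-page/</loc></url>
</urlset>"""


def fake_status(url: str) -> int:
    return 404 if url.endswith("/old-page/") else 200


broken = find_broken_urls(SAMPLE_SITEMAP, fetch_status=fake_status)
print(broken)
```

To check a live site, call `find_broken_urls` with your real sitemap content and leave `fetch_status` at its default. Note that this sketch does not handle connection failures (`urllib.error.URLError`), and some servers may respond differently to HEAD requests than to GET.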
